
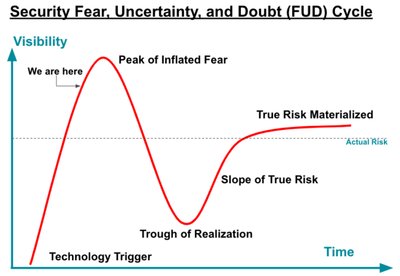

Generative AI and Cybersecurity in a State of Fear, Uncertainty and Doubt


An article written by Jason Rebholz, CISO of Corvus Insurance. Worth the read.

Any thoughts on where we are on the slope?

d


On a side note, there’s a good chance the markets will go mental.*

*This is not advice-grade investment nonsense. If you follow it for anything at all, you’ll lose all your money, complain to me, and I’ll say “Told you so.”


"We are here" is about right.


Interesting. Arguably, the cycle reflects the delay between proof of concept (the fear stage) and something in the wild where we can truly ascertain the impact.

But I find the article makes some sweeping assumptions right off the bat when it says organizations are using AI to "gain efficiencies", among other goals. I haven't seen that yet. The reality is that many technology adoptions, especially early ones, are incredibly inefficient once you add up acquisition cost, training, and lost productivity as new processes come online. It's the same math as the FUD cycle, just with excitement, reality, and rethinking (ERR, if you will).

What many technology adoptions are really about is competitive advantage: if we don't have this, we'll lose market share. Often that reflects the risk of the business plan (we need to dominate the market, and if we don't, we're Pets.com). However, it also translates into security risk, as dollars and people get diverted to chasing shiny, pricey things rather than ensuring quality of operations. It turns into a "build it and then secure it" approach, and I think we are seeing that with AI.


Before diving into all of this, let's put it in very simple language.

Generative AI is like a smart computer program that creates things by itself, such as writing stories or making images.

Cybersecurity is all about keeping our computers safe from bad things, like hackers and viruses.

A state of fear, uncertainty, and doubt is when people are afraid of things that are uncertain or may never happen, such as their computer getting attacked.

So, when we talk about "Generative AI and Cybersecurity in a State of Fear, Uncertainty, and Doubt," it means that people are concerned about how these smart computer programs might be used to do harmful things or make our online world less secure.

It's like worrying about the safety of our digital world because we're not sure how these smart programs might be misused.


@Soniya-01 You obviously have a talent for simplifying subjects for a wider non-technical audience.

However, new technologies often escape people's attention, or those who are motivated jump in feet first and only understand the implications later down the track.

Often we find ourselves in situations where we have to immerse ourselves fully in the subject and understand it, to the point where we can present or lecture on it publicly. This can mean taking courses or, for instance, revisiting the linear algebra from our school days.

Or we actively participate in groups such as https://thealliance.ai/

All of this counts toward our CPE requirements, but the main factor is that it motivates us to communicate the subject to our peers and organisations at their level, so that they understand the business benefits and can tell the real information surrounding it from the fake.

Regards

Caute_Cautim


@Soniya-01 wrote:

It's like worrying about the safety of our digital world because we're not sure how these smart programs might be misused.

We need to worry about these and other programs and how they may affect our digital world. I firmly believe that anyone who does not worry is like the ostriches in South Africa that bury their heads in the sand.

On the positive side, AI can automate repetitive tasks, assist in decision-making, and help create new technologies. On the other hand, it can and will open up many vulnerabilities unless it is governed properly.

d


@dcontesti Indeed: embrace it, understand it, and use it appropriately to counter attackers, who will certainly be using it against organisations, or even poisoning it through Shadow AI.

Regards

Caute_Cautim